
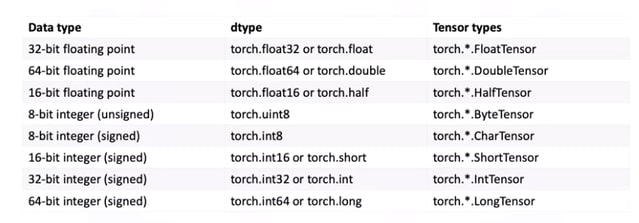


- DistributedDataParallel non-floating point dtype parameter with requires_grad=False · Issue #32018 · pytorch/pytorch · GitHub



🐛 Bug: Using DistributedDataParallel on a model that has at least one non-floating-point dtype parameter with requires_grad=False, with WORLD_SIZE <= nGPUs/2 on the machine, results in the error "Only Tensors of floating point dtype can re…"
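The snippet below is not taken from the issue and does not reproduce the multi-GPU failure. It is a minimal sketch, assuming the integer tensor is model state that is never meant to be trained. It shows two things on CPU: autograd refuses requires_grad=True on an integer tensor, and integer state can be kept out of the module's parameters entirely by registering it as a buffer. Whether this sidesteps the DDP error in a given PyTorch version is an assumption, not something the issue confirms. The class TokenCounterModel, the buffer name step_count, and the helper integer_tensor_can_require_grad are hypothetical names chosen for illustration.

```python
import torch
import torch.nn as nn


class TokenCounterModel(nn.Module):
    """Hypothetical model that carries integer state alongside float weights."""

    def __init__(self) -> None:
        super().__init__()
        self.linear = nn.Linear(4, 2)
        # Integer state stored as a buffer, not an nn.Parameter: buffers are
        # not returned by parameters(), so they are never asked to require
        # gradients. DDP broadcasts buffers from rank 0 by default
        # (broadcast_buffers=True) instead of treating them as parameters.
        self.register_buffer("step_count", torch.zeros((), dtype=torch.long))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        self.step_count += 1  # in-place update of the integer buffer
        return self.linear(x)


def integer_tensor_can_require_grad() -> bool:
    """Return True if autograd accepts requires_grad=True on an int64 tensor."""
    try:
        torch.zeros(3, dtype=torch.long).requires_grad_(True)
        return True
    except RuntimeError:
        # PyTorch raises a RuntimeError whose message starts with
        # "Only Tensors of floating point ..." for integer dtypes.
        return False


model = TokenCounterModel()
# Only the floating-point Linear weights and bias are parameters.
print({p.dtype for p in model.parameters()})
print(integer_tensor_can_require_grad())
model(torch.randn(5, 4))
print(int(model.step_count))
```

A buffer still moves with the module on .to(device) and is saved in its state_dict, so it behaves like model state without ever appearing in the list of tensors handed to the optimizer or to gradient synchronization.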

TypeError: only floating-point types are supported as the default

Wrong gradients when using DistributedDataParallel and autograd

Torch 2.1 compile + FSDP (mixed precision) + LlamaForCausalLM

pytorch-distributed-training/dataparallel.py at master · rentainhe

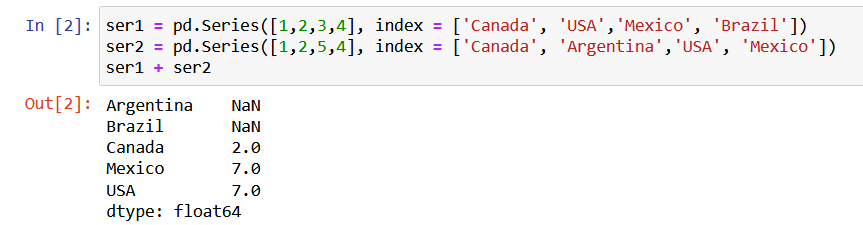

Introduction to Tensors in Pytorch #1

Achieving FP32 Accuracy for INT8 Inference Using Quantization



RuntimeError: Only Tensors of floating point and complex dtype can

nn.DataParallel ignores requires_grad setting when running · Issue

How distributed training works in Pytorch: distributed data

Distributed Data Parallel and Its Pytorch Example


Inplace error if DistributedDataParallel module that contains a